The AI landscape just experienced a seismic shift. China’s Moonshot AI has released Kimi K2, an open-source powerhouse that isn’t just competing with closed-source giants like GPT-4.1 and Claude 4 Sonnet: it’s actually beating them on multiple benchmarks. With 1 trillion parameters and revolutionary agentic capabilities, this model is redefining what’s possible in open-source AI.

1. What Makes Kimi K2 Different?

Forget everything you think you know about AI chatbots. Kimi K2 isn’t just another language model—it’s an agentic AI system designed to actually do things, not just talk about them.

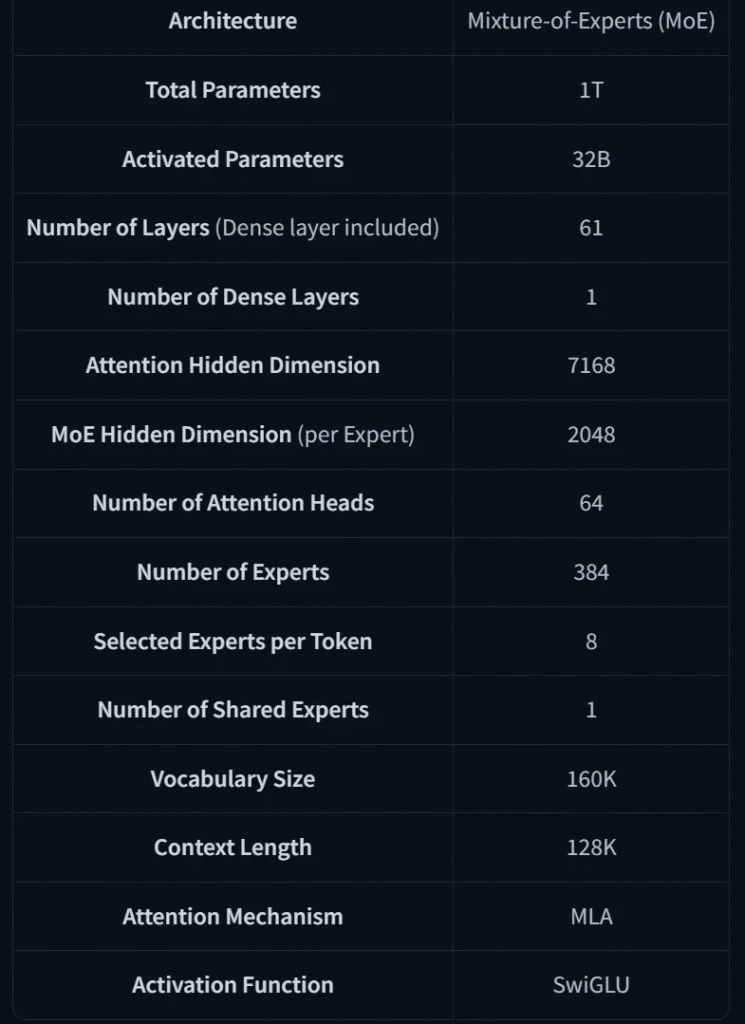

Key Specifications:

- 1 trillion total parameters with 32 billion active per inference

- Mixture-of-Experts (MoE) architecture for efficient scaling

- 128,000 token context window

- Open weights released under a modified MIT license, free to use

- Agentic intelligence – executes code, manages files, uses tools autonomously

When you ask Kimi K2 to “analyze salary trends for remote vs. onsite jobs,” it doesn’t give you a blog post. It gives you statistical analysis, interactive visualizations, and actionable insights—all through 15+ autonomous tool calls.

2. Revolutionary Architecture: Built for Action, Not Just Chat

Kimi K2 is a Mixture-of-Experts (MoE) transformer designed to scale to a very large parameter count, run efficiently, and execute agentic tasks rather than just chat.

Base Structure

- Mixture-of-Experts (MoE): The model has a total of 1 trillion parameters, but only 32 billion are active for any given token. A router sends each token through a small subset of expert sub-networks, so per-token compute stays far below that of a dense 1-trillion-parameter model.

- Sparse Activation: Not all parts of the network are used for every input. In each MoE layer, a router picks 8 of 384 experts for every token, and one shared expert is always active.

- Fewer Attention Heads: The model uses 64 attention heads, half as many as DeepSeek-V3’s 128. Moonshot made this trade-off to reduce inference cost in long-context scenarios.
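The routing described above can be sketched in a few lines of NumPy. This is a toy, single-token illustration, not Moonshot’s implementation: the expert counts follow the Kimi K2 model card (384 routed experts, 8 selected per token, one shared expert), while softmax gating over the selected experts, the tiny hidden size, and the single-matrix “experts” are simplifying assumptions so it runs instantly.

```python
import numpy as np

# Expert counts follow the Kimi K2 model card; everything else is shrunk for illustration.
NUM_EXPERTS = 384   # routed experts per MoE layer
TOP_K = 8           # routed experts selected per token
HIDDEN = 16         # toy hidden size (the real model is far larger)

rng = np.random.default_rng(0)
router_w = rng.normal(size=(HIDDEN, NUM_EXPERTS))
# Each "expert" is one random linear map here; real experts are gated feed-forward networks.
experts = rng.normal(size=(NUM_EXPERTS, HIDDEN, HIDDEN)) / np.sqrt(HIDDEN)
shared_expert = rng.normal(size=(HIDDEN, HIDDEN)) / np.sqrt(HIDDEN)


def moe_forward(x: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Route one token vector x through its top-k experts plus the shared expert."""
    logits = x @ router_w                      # one routing score per expert
    top = np.argsort(logits)[-TOP_K:]          # indices of the k highest scores
    gates = np.exp(logits[top] - logits[top].max())
    gates /= gates.sum()                       # softmax over the selected experts only
    routed = sum(g * (x @ experts[i]) for g, i in zip(gates, top))
    return routed + x @ shared_expert, top     # shared expert sees every token


token = rng.normal(size=HIDDEN)
out, chosen = moe_forward(token)
print(out.shape, sorted(chosen.tolist()))

# Only a small slice of the parameters does work per token: 32B active of 1T total.
print(f"active fraction: {32e9 / 1e12:.1%}")
```

The design point this illustrates: adding experts grows total capacity, but per-token compute depends only on the 8 selected experts plus the shared one.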

Optimizer: MuonClip

Most large models use AdamW. Kimi K2 doesn’t.

The MuonClip optimizer is particularly noteworthy. It prevents “logit explosions” (attention scores that grow uncontrollably during training), a problem Moonshot found to be especially common when scaling up the Muon optimizer, and it kept training stable across all 15.5 trillion tokens.

- MuonClip builds on Muon, the optimizer Moonshot previously scaled up to train its Moonlight model.

- The goal is training stability at scale. Large MoE models often suffer from exploding attention values.

- To fix this, Kimi K2 applies qk-clip: after an optimizer update, if the largest attention logit exceeds a threshold, it rescales the query and key projection weights to pull that logit back down. This keeps attention scores from blowing up without degrading performance.
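The qk-clip step can be sketched for a single attention head. Moonshot’s K2 announcement describes a rescaling factor η = min(τ / max_logit, 1) split across the two projections as W_q ← η^α · W_q and W_k ← η^(1 - α) · W_k; because attention logits are bilinear in W_q and W_k, this multiplies every logit by exactly η. The threshold τ = 100, α = 0.5, and the toy shapes below are illustrative assumptions, and the production method’s per-head details may differ.

```python
import numpy as np


def max_attention_logit(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray) -> float:
    """Largest scaled dot-product logit q.k / sqrt(d) over all query/key pairs."""
    q, k = x @ w_q, x @ w_k
    return float((q @ k.T / np.sqrt(q.shape[-1])).max())


def qk_clip(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray,
            tau: float = 100.0, alpha: float = 0.5) -> tuple[np.ndarray, np.ndarray]:
    """Rescale W_q and W_k so the max logit is capped at tau (single-head sketch)."""
    m = max_attention_logit(x, w_q, w_k)
    if m <= tau:                 # logits already within bounds: leave weights alone
        return w_q, w_k
    eta = tau / m                # 0 < eta < 1 because m > tau > 0
    # Logits are bilinear in (W_q, W_k), so scaling by eta**alpha and
    # eta**(1 - alpha) multiplies every logit by exactly eta.
    return w_q * eta**alpha, w_k * eta ** (1 - alpha)


rng = np.random.default_rng(0)
x = rng.normal(size=(8, 32))              # 8 tokens, model dim 32 (toy sizes)
w_q = rng.normal(size=(32, 16)) * 3.0     # deliberately large weights
w_k = rng.normal(size=(32, 16)) * 3.0

before = max_attention_logit(x, w_q, w_k)
new_q, new_k = qk_clip(x, w_q, w_k)
after = max_attention_logit(x, new_q, new_k)
print(f"max logit before: {before:.1f}, after: {after:.1f}")
```

Because the clip only activates when the cap is exceeded, heads whose logits are already in range keep their weights unchanged.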

Training Setup

- Trained on 15.5 trillion tokens

- Pretraining was smooth — no major loss spikes or breakdowns

- The architecture was tuned specifically for token efficiency, since high-quality human data is getting harder to scale

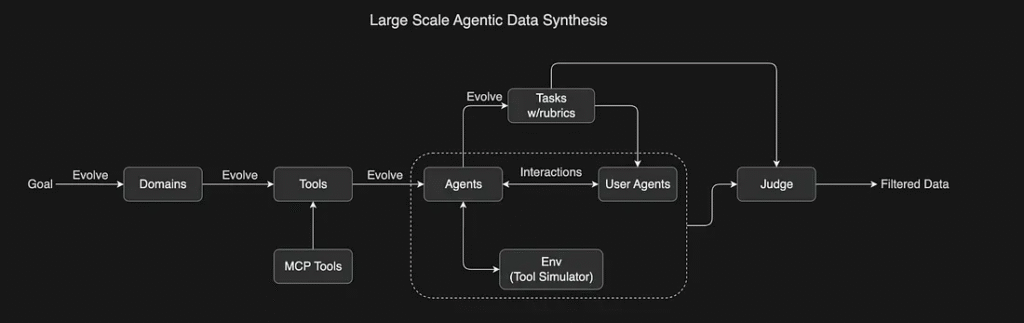

Agentic Capabilities (Post-training Layer)

After pretraining, Kimi K2 goes through a different kind of training focused on doing, not just knowing.

- Tool-use Simulation: It learns by simulating thousands of tool-use tasks across hundreds of domains, involving both real tools (APIs, shells, databases) and synthetic ones.

- Rubric-Based Evaluation: A self-judging mechanism grades outputs using rubrics — this allows the model to improve even for tasks that don’t have a clear “right” answer (like writing or analysis).

- Reinforcement Learning: The model is further trained with reinforcement learning on both verifiable rewards (like solving a math problem correctly) and non-verifiable ones (like writing a good summary), with its critic updated as training progresses.
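To make the two reward types concrete, here is a hypothetical sketch. Neither function reflects Moonshot’s actual reward code: `verifiable_reward` assumes the task has a single checkable answer, and `rubric_reward` assumes a judge has already scored each rubric criterion between 0 and 1. The criterion names and weights are invented for illustration.

```python
from collections.abc import Mapping


def verifiable_reward(answer: str, reference: str) -> float:
    """Reward for tasks with a checkable answer (e.g. a math result): 1.0 or 0.0."""
    return 1.0 if answer.strip() == reference.strip() else 0.0


def rubric_reward(scores: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Weighted average of per-criterion judge scores in [0, 1].

    Used where there is no single right answer (summaries, analysis).
    """
    total = sum(weights.values())
    return sum(weights[c] * scores[c] for c in weights) / total


# Hypothetical rubric for a summary; names and weights are illustrative only.
weights = {"faithful": 0.5, "covers_key_points": 0.25, "concise": 0.25}
judge_scores = {"faithful": 1.0, "covers_key_points": 0.5, "concise": 1.0}

print(verifiable_reward(" 42", "42"))        # 1.0
print(rubric_reward(judge_scores, weights))  # 0.875
```

The verifiable reward is cheap and exact but only exists for some tasks; the rubric reward covers open-ended tasks at the cost of depending on the judge’s scores.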

Real Tasks, Real Output

This isn’t a demo model. It works in the wild. Want a few use cases?

- You tell it: “Compare salary differences across remote vs on-site jobs from 2020–2025.” It gives you: Violin plots, bar charts, ANOVA tests, t-tests, and an HTML dashboard you can deploy.

- You ask: “Plan my Coldplay tour in London.” It opens flight search, books Airbnb, checks the schedule, builds an itinerary, and gives you a trip summary — all by calling tools on its own.

- You type in: “Convert my Flask app to Rust.” It rewrites the code, benchmarks it, and gives you a report.

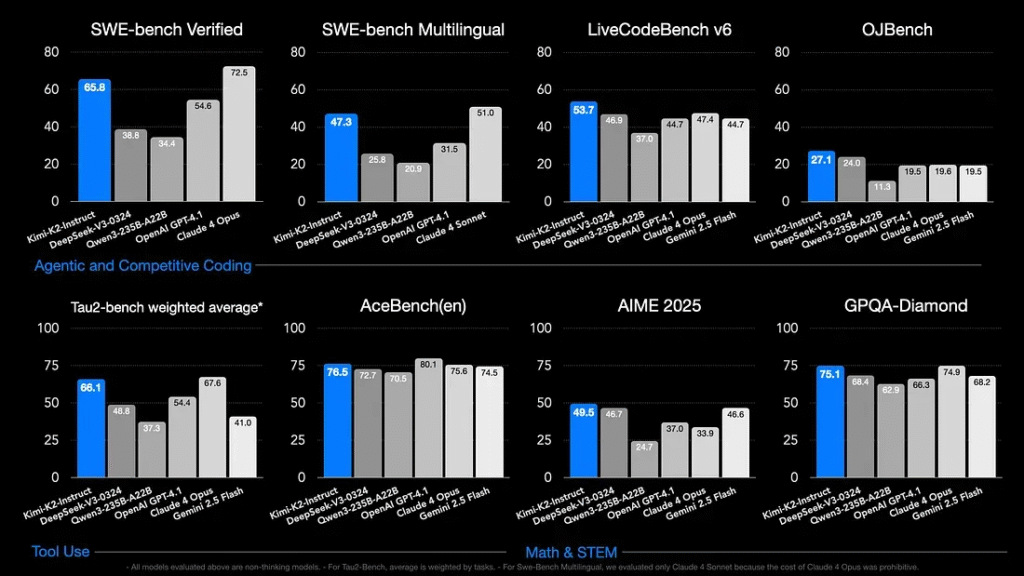

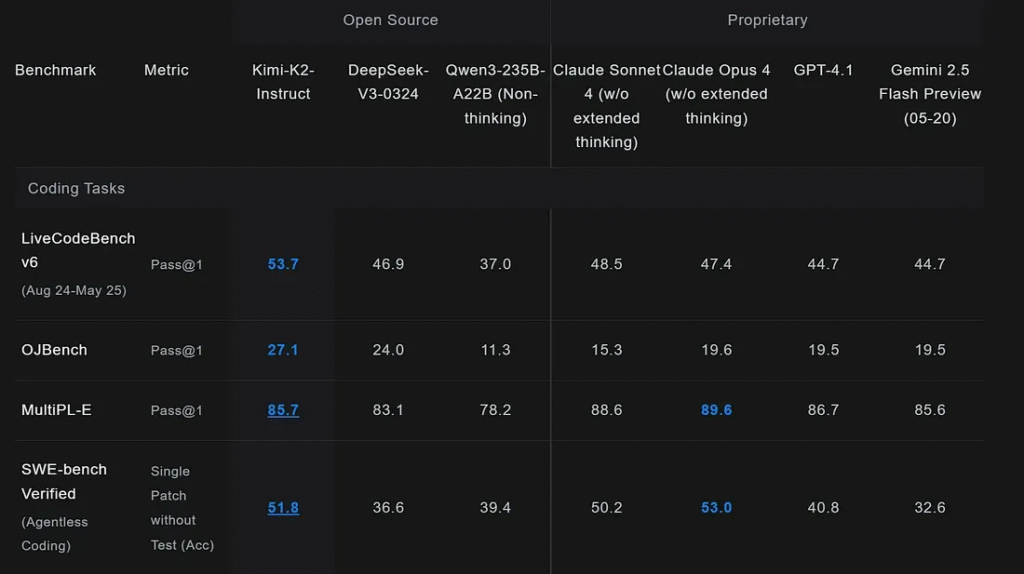

3. Benchmark Domination: The Numbers Don’t Lie

According to Moonshot AI’s published results, Kimi K2 outperforms the models it was compared against on each of the benchmarks below.

Coding Excellence

SWE-bench Verified (Real-world bug fixing):

- Kimi K2-Instruct: 65.8%

- DeepSeek-V3: 36.6%

- Qwen-2.5-Coder: 34.4%

LiveCodeBench v6 (Competitive programming):

- Kimi K2-Instruct: 53.7%

- DeepSeek-V3: 46.9%

- Claude 4 Sonnet: 47.4%

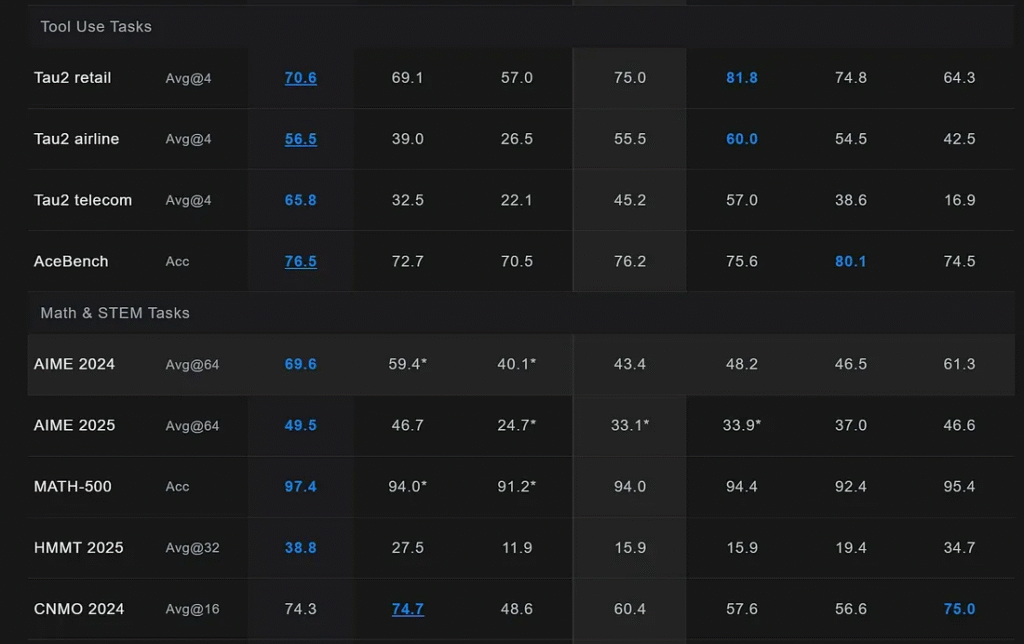

Mathematical Reasoning

AIME 2025 (Advanced mathematics):

- Kimi K2-Instruct: 49.5%

- DeepSeek-V3: 46.7%

- Claude 4 Sonnet: 33.9%

MATH-500 (Problem-solving accuracy):

- Kimi K2-Instruct: 97.4%

- DeepSeek-V3: 94.0%

- Claude 4 Opus: 94.4%

Agentic Task Performance

Tau2-bench (Tool usage):

- Kimi K2-Instruct: 68.1%

- DeepSeek-V3: 48.8%

- Claude 4 Opus: 41.0%

4. Two Variants for Every Need

Moonshot AI strategically released two versions to serve different use cases:

Kimi-K2-Base

Perfect for researchers and developers who need:

- Raw, unrefined model for fine-tuning

- Complete control over customization

- Foundation for specialized applications

- Research into model behavior

Kimi-K2-Instruct

Ideal for immediate deployment with:

- Post-trained for chat and agentic tasks

- Optimized for production environments

- Ready-to-use tool integration

- Compatible with the OpenAI API format

5. Real-World Agentic Capabilities

Beyond the examples above, here’s what Kimi K2 is built to handle autonomously:

Business Intelligence

- Analyze large datasets with statistical rigor

- Generate comprehensive reports with visualizations

- Perform hypothesis testing and correlation analysis

- Create interactive dashboards

Software Development

- Debug existing codebases across multiple languages

- Convert applications between programming frameworks

- Optimize code performance and suggest improvements

- Generate complete applications from requirements

Content Creation

- Design interactive web applications

- Create data visualizations and infographics

- Generate SVG illustrations and graphics

- Build responsive user interfaces

6. Multiple Access Options: From Free to Self-Hosted

Free Web Interface

- Access through kimi.com

- No subscription required

- Full agentic capabilities

- User-friendly interface

API Access

Free Tier Includes:

- 32,000 tokens per minute

- 3 requests per minute

- 1.5 million tokens per day

- OpenAI-compatible format
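If you script against the free tier, a client-side limiter helps you stay under the request cap. The sketch below is a generic sliding-window limiter, not part of any Moonshot SDK; it takes the 3-requests-per-minute figure quoted above as its default (check your account’s actual limits), does not track the per-minute token budget, and accepts an injectable clock so it can be tested without sleeping.

```python
import time
from collections import deque
from typing import Callable


class SlidingWindowLimiter:
    """Allow at most `max_calls` calls in any rolling `window` seconds."""

    def __init__(self, max_calls: int = 3, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_calls = max_calls
        self.window = window
        self.clock = clock
        self.calls: deque = deque()  # timestamps of calls still inside the window

    def try_acquire(self) -> float:
        """Record a call and return 0.0 if allowed now, else the seconds to wait."""
        now = self.clock()
        while self.calls and now - self.calls[0] >= self.window:
            self.calls.popleft()  # forget calls older than the window
        if len(self.calls) < self.max_calls:
            self.calls.append(now)
            return 0.0
        return self.window - (now - self.calls[0])


limiter = SlidingWindowLimiter()  # defaults: 3 requests per 60 seconds


def rate_limited(fn, *args, **kwargs):
    """Call fn once the limiter allows it, sleeping in between if necessary."""
    while (delay := limiter.try_acquire()) > 0:
        time.sleep(delay)
    return fn(*args, **kwargs)
```

Wrap each API request in `rate_limited(...)`, passing the client method and its keyword arguments; a sliding window avoids the burst-at-the-boundary problem of a fixed per-minute counter.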

Competitive Pricing:

- Input: $0.60 per million tokens

- Output: $2.50 per million tokens

- Well below the list prices of GPT-4.1 ($2 input / $8 output per million tokens) and Claude 4 Sonnet ($3 / $15)
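The per-token prices above translate directly into a budget estimate. A minimal sketch, assuming the flat $0.60 input / $2.50 output rates quoted above and ignoring cache discounts and the free-tier allowance; the workload in the example is hypothetical.

```python
# Prices quoted above, in USD per million tokens (check current pricing before budgeting).
INPUT_PRICE_PER_M = 0.60
OUTPUT_PRICE_PER_M = 2.50


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request at flat per-million-token rates."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


# Hypothetical workload: 1,000 requests, each with 2,000 input and 500 output tokens.
per_request = request_cost(2_000, 500)
print(f"${per_request:.5f} per request, ${per_request * 1_000:.2f} per 1,000 requests")
```

Output tokens cost about four times as much as input tokens here, so verbose answers dominate the bill faster than long prompts do.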

Self-Hosting Options

- Complete model weights on Hugging Face

- Compatible with vLLM, SGLang, and TensorRT-LLM

- Full control over data and privacy

- No vendor lock-in

7. Getting Started: Three Simple Ways

Option 1: Web Interface

- Visit kimi.com

- Start chatting immediately

- Access all features instantly

Option 2: API Integration

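```python
import openai

# The Moonshot API is OpenAI-compatible, so the standard OpenAI client works.
client = openai.OpenAI(
    api_key="your-kimi-api-key",  # replace with your own key
    base_url="https://api.moonshot.cn/v1",
)

response = client.chat.completions.create(
    model="kimi-k2-instruct",  # model ID used in this guide; check your provider's model list
    messages=[
        {"role": "user", "content": "Analyze this dataset and create visualizations"}
    ],
)

# Print the assistant's reply
print(response.choices[0].message.content)
```

Option 3: Self-Hosting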

- Download weights from Hugging Face

- Set up inference engine (vLLM recommended)

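- Configure hardware: at FP8 (one byte per parameter), the 1 trillion weights alone take roughly 1 TB, so plan for a multi-GPU server or cluster rather than a single workstation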

- Deploy on your infrastructure
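A back-of-the-envelope estimate of weight memory shows why self-hosting needs serious hardware. The sketch assumes weight memory is simply parameter count times bytes per parameter; it ignores the KV cache, activations, and framework overhead, so real requirements are higher. The precisions listed are common formats, not a statement about which builds are published.

```python
TOTAL_PARAMS = 1e12   # every expert must be resident in memory
ACTIVE_PARAMS = 32e9  # parameters used per token (drives compute, not storage)

BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, precision: str) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes), excluding KV cache and overhead."""
    return params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(TOTAL_PARAMS, precision):,.0f} GB of weights")

# Weights actually touched per token at FP8: small, but the rest must still be loaded.
print(f"active per token (fp8): {weight_memory_gb(ACTIVE_PARAMS, 'fp8'):,.0f} GB")
```

This is the MoE trade-off in numbers: compute per token tracks the 32B active parameters, but memory has to hold all 1T.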

8. Current Limitations and Future Roadmap

Known Limitations

- No vision capabilities (text-only currently)

- 128K token context limit

- Tool definition sensitivity (requires clear specifications)

- Verbose output for complex reasoning tasks
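Since the model is sensitive to how tools are described, it pays to write tool schemas precisely. Below is a hypothetical `get_salary_stats` tool in the OpenAI function-calling `tools` format, assuming the OpenAI-compatible API accepts it the same way (verify against your provider’s docs), plus a small hypothetical checker that catches common schema mistakes before a request is sent. The tool name and fields are invented for illustration.

```python
# Hypothetical tool; the name, description, and parameters are illustrative only.
salary_tool = {
    "type": "function",
    "function": {
        "name": "get_salary_stats",
        # State exactly what the tool does and returns; vague descriptions invite bad calls.
        "description": (
            "Return mean and median annual salary in USD for one job category and "
            "work arrangement in a given year, as JSON with keys 'mean' and 'median'."
        ),
        "parameters": {
            "type": "object",
            "properties": {
                "category": {"type": "string", "description": "Job category, e.g. 'data science'."},
                "arrangement": {"type": "string", "enum": ["remote", "onsite", "hybrid"]},
                "year": {"type": "integer", "minimum": 2020, "maximum": 2025},
            },
            "required": ["category", "arrangement", "year"],
            "additionalProperties": False,
        },
    },
}


def check_tool_schema(tool: dict) -> None:
    """Raise ValueError on common schema mistakes before sending a tool to the model."""
    fn = tool["function"]
    params = fn["parameters"]
    properties = params.get("properties", {})
    if not fn.get("description"):
        raise ValueError(f"{fn['name']}: add a description of what the tool does")
    missing = set(params.get("required", [])) - set(properties)
    if missing:
        raise ValueError(f"{fn['name']}: required but undefined: {sorted(missing)}")
    for name, spec in properties.items():
        if "type" not in spec:
            raise ValueError(f"{fn['name']}.{name}: missing a JSON Schema type")


check_tool_schema(salary_tool)  # passes silently
# Then pass it with the request, e.g. tools=[salary_tool] in chat.completions.create(...)
```

Enums and numeric bounds narrow what the model can emit, which reduces malformed or out-of-range tool calls.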

Future Developments

- Multimodal capabilities (vision and image processing)

- Extended context windows

- Improved reasoning chains

- Domain-specific variants

9. Why Kimi K2 Matters for the AI Industry

Kimi K2 represents more than just another AI model—it’s proof that open-source AI can match and exceed proprietary alternatives. This democratization of advanced AI capabilities has profound implications:

For Developers

- Access to state-of-the-art AI without subscription costs

- Complete control over deployment and customization

- No vendor lock-in and few usage restrictions (modified MIT license)

- Transparent model behavior and limitations

For Researchers

- Open weights enable deep investigation

- Novel training techniques can be studied and replicated

- Benchmarks can be independently verified

- Foundation for future AI research

For the Industry

- Accelerates overall AI progress through open collaboration

- Reduces barriers to AI adoption

- Challenges the dominance of closed-source models

- Promotes innovation through accessibility

10. The Future of AI is Open

Kimi K2 isn’t just competing with GPT and Claude—it’s redefining what’s possible in open-source AI. With its revolutionary agentic capabilities, state-of-the-art performance, and complete accessibility, it represents a fundamental shift toward democratized AI.

The gap between open-source and proprietary models isn’t just narrowing—it’s disappearing. Kimi K2 proves that the future of AI belongs to models that are not only powerful but also accessible, transparent, and free.