1. What is Context Engineering?

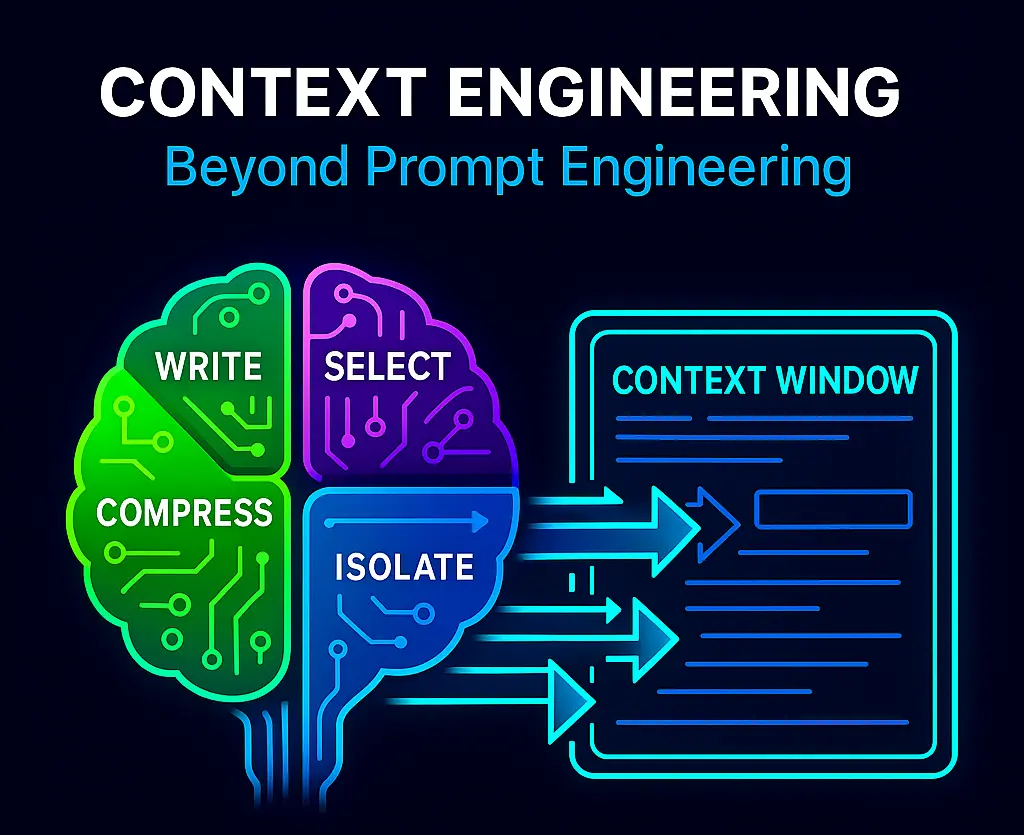

Context engineering is the discipline of designing and building dynamic systems that provide the right information and tools, in the right format, at the right time, to give an LLM everything it needs to accomplish a task. Unlike traditional prompt engineering, which focuses on crafting static instructions, context engineering manages the entire information ecosystem around AI models.

Think of it this way: LLMs are like a new kind of operating system. The LLM is like the CPU and its context window is like the RAM, serving as the model’s working memory. Context engineering acts as the memory management system, ensuring optimal performance.
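
To make the memory-management analogy concrete, here is a minimal sketch of a context builder that admits items into a fixed-size window by priority. The `ContextItem` type, the `build_context` function, and the word-count tokenizer are illustrative assumptions, not part of any particular framework; a real system would use the model's own tokenizer.

```python
from dataclasses import dataclass


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


@dataclass
class ContextItem:
    source: str    # hypothetical labels, e.g. "instructions", "memory"
    text: str
    priority: int  # lower number = more important


def build_context(items: list[ContextItem], budget: int) -> list[ContextItem]:
    """Admit items in priority order, skipping any that would exceed the token budget."""
    admitted, used = [], 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = count_tokens(item.text)
        if used + cost <= budget:
            admitted.append(item)
            used += cost
    return admitted


items = [
    ContextItem("instructions", "Answer concisely and cite sources.", 0),
    ContextItem("user_input", "What changed in the Q3 pricing policy?", 1),
    ContextItem("knowledge_base", "Q3 pricing policy: enterprise tier rose 5 percent.", 2),
    ContextItem("memory", "User previously asked about Q2 pricing and prefers bullet points.", 3),
]
window = build_context(items, budget=20)
print([item.source for item in window])
```

With a budget of 20 word-tokens, the lowest-priority memory item is the one left out, much as an operating system evicts the least important pages first.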

graph TD

A[User Input] --> B[Context Window]

C[Knowledge Base] --> B

D[Memory Systems] --> B

E[Tools & Functions] --> B

F[Instructions] --> B

B --> G[LLM Processing]

G --> H[Optimized Output]

H --> I[Context Update]

I --> B

2. Why Context Engineering Matters in 2025

As AI applications move from single prompts to multi-step agents, the core skill shifts from wording a prompt to supplying the right information and tools, in the right format, at the right time. Here’s why it’s crucial:

Beyond Static Prompts

A System, Not a String: Context isn’t just a static prompt template. Modern AI applications require dynamic, adaptive context management that evolves with user interactions.

Agent-Centric AI

In long-running agents, context is not a prompt. It’s a runtime system that manages:

- Instructional knowledge: How the agent behaves

- Operational knowledge: What the agent has done recently

- Retrieved knowledge: What the agent knows

Enterprise-Scale Requirements

This is becoming critical for many enterprise solutions, where the task is not just generating application code but scanning through various types of data to solve a complex enterprise problem.

3. The Four Pillars of Context Engineering

1. Write Context: Persistent Memory Management

Writing context involves saving critical information outside the immediate context window for future retrieval.

graph LR

A[Agent Action] --> B[Extract Key Info]

B --> C[Scratchpad Storage]

B --> D[Long-term Memory]

C --> E[Session Context]

D --> F[Cross-Session Context]

Implementation Strategies:

- Scratchpads: Temporary storage for ongoing tasks

- Episodic Memory: Storing experiences and examples

- Procedural Memory: Saving instructions and learned procedures

- Semantic Memory: Maintaining facts and relationships
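
The split between session-scoped and cross-session storage can be sketched as follows. This is a minimal illustration, assuming a JSON file as the persistent store; the `Scratchpad` and `LongTermMemory` classes are hypothetical names, and a production system would more likely use a database or a framework's memory API.

```python
import json
import tempfile
from pathlib import Path


class Scratchpad:
    """Session-scoped notes that live only as long as the current task."""

    def __init__(self) -> None:
        self.notes: list[str] = []

    def write(self, note: str) -> None:
        self.notes.append(note)

    def read(self) -> str:
        return "\n".join(self.notes)


class LongTermMemory:
    """Cross-session memory persisted to a JSON file, grouped by memory type."""

    KINDS = ("episodic", "procedural", "semantic")

    def __init__(self, path: Path) -> None:
        self.path = Path(path)
        if self.path.exists():
            self.data = json.loads(self.path.read_text())
        else:
            self.data = {kind: [] for kind in self.KINDS}

    def remember(self, kind: str, entry: str) -> None:
        if kind not in self.KINDS:
            raise ValueError(f"unknown memory kind: {kind}")
        self.data[kind].append(entry)
        self.path.write_text(json.dumps(self.data, indent=2))

    def recall(self, kind: str) -> list[str]:
        return list(self.data[kind])


with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "memory.json"
    pad = Scratchpad()
    pad.write("Step 1 done: fetched invoice list")
    LongTermMemory(store).remember("semantic", "Customer 42 is on the enterprise plan")
    # A fresh object reading the same file stands in for a later session.
    recalled = LongTermMemory(store).recall("semantic")
    print(recalled)
```

The scratchpad disappears with the session, while anything written through `remember` survives because it is read back from disk.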

2. Select Context: Intelligent Information Retrieval

Selecting context means filling the context window with just the right information at each step of an agent’s trajectory.

graph TD

A[User Query] --> B[Semantic Search]

B --> C[Relevance Scoring]

C --> D[Context Ranking]

D --> E[Top-K Selection]

E --> F[Context Window]

G[Knowledge Base] --> B

H[Memory Systems] --> B

I[Tool Descriptions] --> B

Key Techniques:

- Embedding-based retrieval: Using vector similarity for context selection

- Hybrid search: Combining keyword and semantic search

- Context scoring: Ranking information by relevance and recency

- Tool selection: Choosing appropriate tools from available options
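
The scoring and top-K steps above can be sketched in a few lines. This example assumes a toy bag-of-words "embedding" so it runs without external libraries; a real system would call a learned embedding model. The `recency_weight` parameter and the `1 / (1 + age)` recency formula are illustrative choices, not a standard.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_top_k(query: str, candidates: list[tuple[str, int]], k: int = 2,
                 recency_weight: float = 0.2) -> list[str]:
    """Rank (text, age_in_turns) candidates by a blend of relevance and recency."""
    q = embed(query)
    scored = []
    for text, age in candidates:
        relevance = cosine(q, embed(text))
        recency = 1.0 / (1 + age)  # newer items (smaller age) score higher
        score = (1 - recency_weight) * relevance + recency_weight * recency
        scored.append((score, text))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


candidates = [
    ("refund policy for annual plans", 5),
    ("office lunch menu for friday", 0),
    ("how to request a refund", 2),
]
selected = select_top_k("refund policy", candidates, k=2)
print(selected)
```

The lunch menu is the most recent item but shares no terms with the query, so the recency bonus alone is not enough to get it into the window.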

3. Compress Context: Efficient Information Density

Context compression maintains essential information while reducing token usage.

graph TD

A[Large Context] --> B[Summarization]

A --> C[Trimming]

A --> D[Hierarchical Compression]

B --> E[Condensed Context]

C --> E

D --> E

E --> F[Optimized Window]

Compression Methods:

- Summarization: Creating concise overviews of longer interactions

- Selective trimming: Removing less relevant information

- Hierarchical compression: Multi-level information organization

- Token-aware optimization: Maximizing information density per token
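
As a rough sketch of how summarization and trimming combine, the function below keeps the most recent messages verbatim and collapses older ones into a single summary line. The `summarize` function here is a placeholder that simply truncates each message; in practice it would be an LLM call. Token counts are approximated by word counts.

```python
def count_tokens(text: str) -> int:
    """Approximate token count by whitespace-separated words."""
    return len(text.split())


def summarize(messages: list[str], words_each: int = 4) -> str:
    """Placeholder summarizer: keeps the first few words of each message."""
    heads = [" ".join(m.split()[:words_each]) for m in messages]
    return "Earlier turns (summarized): " + "; ".join(heads)


def compress(messages: list[str], budget: int, keep_recent: int = 2) -> list[str]:
    """Return messages unchanged if they fit; otherwise summarize older turns."""
    if sum(count_tokens(m) for m in messages) <= budget:
        return list(messages)
    split = max(len(messages) - keep_recent, 0)
    older, recent = messages[:split], messages[split:]
    compressed = ([summarize(older)] if older else []) + recent
    # Last resort: trim from the oldest end until the budget is met.
    while len(compressed) > 1 and sum(count_tokens(m) for m in compressed) > budget:
        compressed.pop(0)
    return compressed


history = [
    "User asked for a summary of the 2023 annual report including revenue figures",
    "Assistant returned revenue of 4.2M and noted a 12 percent increase year over year",
    "User asked which region grew fastest",
    "Assistant answered that EMEA grew fastest",
]
compressed = compress(history, budget=25)
for line in compressed:
    print(line)
```

Summarizing first and trimming only as a fallback preserves a trace of older turns whenever the budget allows it.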

4. Isolate Context: Preventing Information Interference

Context isolation prevents different information streams from interfering with each other.

graph TD

A[Main Agent] --> B[Context Router]

B --> C[Task-Specific Agent 1]

B --> D[Task-Specific Agent 2]

B --> E[Task-Specific Agent 3]

C --> F[Isolated Context 1]

D --> G[Isolated Context 2]

E --> H[Isolated Context 3]

Isolation Techniques:

- Multi-agent architecture: Specialized agents with dedicated contexts

- Environment sandboxing: Isolated execution environments

- State management: Separating different types of information

- Context boundaries: Clear separation between different information domains
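
A minimal sketch of the multi-agent idea: a router hands each task to one specialized agent, and each agent keeps its own private context list. The `SubAgent` and `ContextRouter` names and the keyword-based routing rule are assumptions made for illustration; real routers typically use a classifier or an LLM call.

```python
from dataclasses import dataclass, field


@dataclass
class SubAgent:
    name: str
    keywords: tuple[str, ...]
    context: list[str] = field(default_factory=list)  # private to this agent

    def handle(self, task: str) -> str:
        self.context.append(task)
        return f"[{self.name}] handled: {task}"


class ContextRouter:
    """Sends each task to the first agent whose keywords match."""

    def __init__(self, agents: list[SubAgent]) -> None:
        self.agents = agents

    def route(self, task: str) -> str:
        words = set(task.lower().split())
        for agent in self.agents:
            if words & set(agent.keywords):
                return agent.handle(task)
        raise LookupError(f"no agent for task: {task!r}")


billing = SubAgent("billing", ("invoice", "refund"))
search = SubAgent("search", ("find", "lookup"))
router = ContextRouter([billing, search])

print(router.route("refund the last invoice"))
print(router.route("find the onboarding guide"))
print(billing.context, search.context)
```

Because each agent only ever appends to its own list, billing details never leak into the search agent's window.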

4. Advanced Context Engineering Techniques

Dynamic Context Adaptation

In long-running agents, context is not a prompt. It’s a runtime composed of instructional, operational, and retrieved knowledge, often dynamically stitched together.
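
One way to picture a runtime that watches its own size is the threshold check sketched below: when usage passes 90% of capacity, irrelevant messages are trimmed first, and the oldest half is summarized only if that is not enough. The `adapt` function, the caller-supplied `is_relevant` and `summarize` callbacks, and the word-count tokenizer are all illustrative assumptions.

```python
from typing import Callable


def used(messages: list[str]) -> int:
    """Approximate token usage by counting whitespace-separated words."""
    return sum(len(m.split()) for m in messages)


def adapt(messages: list[str], capacity: int,
          is_relevant: Callable[[str], bool],
          summarize: Callable[[list[str]], str],
          threshold: float = 0.9) -> list[str]:
    """Compress the context only when usage exceeds threshold * capacity."""
    limit = threshold * capacity
    if used(messages) <= limit:
        return list(messages)  # continue normally
    kept = [m for m in messages if is_relevant(m)]  # trim irrelevant
    if used(kept) > limit and len(kept) > 1:
        half = len(kept) // 2
        kept = [summarize(kept[:half])] + kept[half:]  # summarize oldest
    return kept


messages = [
    "weather is nice today",
    "order 1182 shipped on Monday",
    "order 1182 delayed by customs",
    "customer wants refund for order 1182",
]
adapted = adapt(
    messages,
    capacity=20,
    is_relevant=lambda m: "order" in m,
    summarize=lambda ms: f"summary of {len(ms)} earlier messages",
)
print(adapted)
```

Here trimming the off-topic weather message is enough to get back under the threshold, so no summarization happens.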

graph TD

A[Context Monitor] --> B{Context Size Check}

B -->|>90% Full| C[Compression Trigger]

B -->|<90% Full| D[Continue Normal]

C --> E[Summarize Oldest]

C --> F[Trim Irrelevant]

E --> G[Updated Context]

F --> G

G --> H[Resume Processing]

Long-Context Optimization

Infinite Retrieval and Cascading KV Cache push LLMs closer to human-like context handling: the first zeroes in on key details, while the second maintains a broader memory of earlier content.

Advanced Techniques:

- Infinite Retrieval: Selective attention to key information in large contexts

- Cascading KV Cache: Hierarchical memory management

- Context streaming: Processing information in manageable chunks

- Attention optimization: Focusing on relevant information segments
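
Of the techniques listed, context streaming is the easiest to illustrate at the application level (Infinite Retrieval and Cascading KV Cache operate inside the model and are not shown here). The sketch below splits a long input into overlapping word-based chunks; the `stream_chunks` name, the chunk size, and the overlap are assumptions chosen for the example.

```python
from typing import Iterator


def stream_chunks(text: str, chunk_size: int, overlap: int = 0) -> Iterator[str]:
    """Yield word-based chunks of at most chunk_size words, sharing `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    tokens = text.split()
    step = chunk_size - overlap
    for start in range(0, len(tokens), step):
        yield " ".join(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the final chunk already reached the end of the text


chunks = list(stream_chunks("a b c d e f g h i j", chunk_size=4, overlap=1))
print(chunks)
```

The one-word overlap means a sentence that straddles a chunk boundary is still partly visible in the following chunk.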

Context Quality Assurance

The goal in context engineering is to optimize the information you provide in the LLM’s context window. This also means filtering out noisy information, which is a science of its own, as it requires systematically measuring the performance of the LLM.

5. Common Challenges and Solutions

1. Context Poisoning

Problem: Errors propagate through context, affecting subsequent decisions.

Solution: Implement context validation and error correction mechanisms.
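
A validation layer can be as simple as a set of checks run before anything is appended to the context. The sketch below is one hedged interpretation: the `Candidate` type, the trusted-source allow-list, and the confidence threshold are all assumptions, and failed items are quarantined for later review rather than corrected automatically.

```python
from dataclasses import dataclass

TRUSTED_SOURCES = {"user", "tool_output", "knowledge_base"}  # assumed allow-list


@dataclass
class Candidate:
    text: str
    source: str
    confidence: float  # 0..1, assumed to be supplied by an upstream step


def validate(item: Candidate, min_confidence: float = 0.7) -> list[str]:
    """Return a list of problems; an empty list means the item passes."""
    problems = []
    if not item.text.strip():
        problems.append("empty text")
    if item.source not in TRUSTED_SOURCES:
        problems.append("untrusted source")
    if item.confidence < min_confidence:
        problems.append("low confidence")
    return problems


def admit(item: Candidate, context: list[str], quarantine: list) -> bool:
    """Add the item to context only if validation passes; otherwise quarantine it."""
    problems = validate(item)
    if problems:
        quarantine.append((item, problems))
        return False
    context.append(item.text)
    return True


context, quarantine = [], []
admit(Candidate("Order 1182 shipped on Monday", "tool_output", 0.95), context, quarantine)
admit(Candidate("Order 1182 was probably cancelled", "model_guess", 0.40), context, quarantine)
print(context)
print([problems for _, problems in quarantine])
```

Keeping rejected items in a quarantine list, instead of dropping them, leaves room for a separate correction step to repair and resubmit them.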

graph TD

A[Context Input] --> B[Validation Layer]

B --> C{Quality Check}

C -->|Pass| D[Add to Context]

C -->|Fail| E[Error Correction]

E --> F[Corrected Context]

F --> D

2. Context Distraction

Problem: The context grows so long that the model over-focuses on it and neglects what it learned during training.

Solution: Implement attention mechanisms and context prioritization.

3. Context Confusion

Problem: Too much irrelevant information leads to poor decisions.

Solution: Use relevance scoring and context filtering.

4. Context Clash

Problem: Conflicting information creates contradictions.

Solution: Implement conflict resolution and information reconciliation.
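
As one possible reconciliation rule, the sketch below keeps the most recent value for each fact and records every overwrite as a conflict for review. The `(key, value, timestamp)` tuple shape and the "newest wins" policy are assumptions; other policies, such as preferring a more trusted source, would fit the same structure.

```python
def reconcile(facts: list[tuple[str, object, int]]) -> tuple[dict, list]:
    """Resolve (key, value, timestamp) facts so the newest value wins.

    Returns the resolved mapping and a list of (key, old_value, new_value) conflicts.
    """
    latest: dict[str, object] = {}
    conflicts = []
    for key, value, _timestamp in sorted(facts, key=lambda f: f[2]):
        if key in latest and latest[key] != value:
            conflicts.append((key, latest[key], value))
        latest[key] = value
    return latest, conflicts


facts = [
    ("plan", "pro", 1),
    ("seats", 10, 2),
    ("plan", "enterprise", 3),
]
resolved, conflicts = reconcile(facts)
print(resolved)
print(conflicts)
```

Returning the conflicts alongside the resolved facts lets the caller decide whether to trust the automatic resolution or surface the disagreement.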

6. Best Practices for Implementation

Start with Clear Objectives

Define what information is essential for your specific use case.

Implement Gradual Complexity

Begin with simple context management and add sophistication incrementally.

Monitor Performance Metrics

Track token usage, response quality, and context effectiveness.
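
A small sketch of what such tracking might look like. The `CallRecord` fields are hypothetical, and "context effectiveness" is defined here, as an assumption, as the share of supplied context items that the response actually used; other definitions are equally reasonable.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CallRecord:
    prompt_tokens: int
    completion_tokens: int
    quality: float        # 0..1, e.g. from human review or an automated grader
    items_supplied: int   # context items placed in the window
    items_used: int       # items the response actually drew on


def summarize_metrics(records: list[CallRecord]) -> dict:
    """Aggregate token usage, quality, and context effectiveness across calls."""
    supplied = sum(r.items_supplied for r in records)
    return {
        "mean_total_tokens": mean(r.prompt_tokens + r.completion_tokens for r in records),
        "mean_quality": mean(r.quality for r in records),
        "context_effectiveness": sum(r.items_used for r in records) / supplied if supplied else 0.0,
    }


records = [
    CallRecord(1200, 200, 0.8, 10, 4),
    CallRecord(800, 100, 0.6, 5, 3),
]
metrics = summarize_metrics(records)
print(metrics)
```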

Design for Scalability

Build systems that can handle growing context requirements.

Maintain Context Hygiene

Regularly audit and clean context to prevent information decay.

7. Tools and Frameworks for Context Engineering

LangGraph

- Thread-scoped memory management

- Multi-agent orchestration

- Built-in compression utilities

- State management for context isolation

Context-Specific Tools

- Provence: Trained context pruning

- MemoryBank: Enhanced memory systems

- MCP (Model Context Protocol): Dynamic context provision

8. Real-World Applications

Enterprise Knowledge Management

Context engineering enables organizations to build intelligent assistants that can navigate complex information landscapes.

Customer Support Systems

Dynamic context management allows support agents to maintain conversation history and user preferences across sessions.

Code Generation and Analysis

Scanning through various types of data to solve a complex enterprise problem requires sophisticated context engineering.

Research and Development

Long-term research projects benefit from persistent context management across multiple sessions.

9. Future of Context Engineering

Emerging Trends

- Infinite context windows: Reducing immediate memory pressure

- Specialized architectures: Purpose-built systems for context management

- Automated context optimization: AI-driven context engineering

- Cross-modal context: Integrating text, images, and other data types

Expected Developments

- More sophisticated compression algorithms

- Better conflict resolution mechanisms

- Advanced attention mechanisms

- Improved multi-agent coordination

10. Conclusion

Context engineering represents a fundamental shift in how we interact with AI systems. It will define how intelligent systems behave, which is why understanding it now helps you understand AI agents, the evolution of AI job roles, and where AI is going.

As we move forward, the ability to effectively manage and optimize context will become increasingly critical for building robust, intelligent AI applications. Whether you’re developing enterprise solutions, research tools, or consumer applications, mastering context engineering will be essential for unlocking the full potential of AI systems.

The future belongs to those who can master this “delicate art and science” of context optimization. Start implementing these techniques today, and you’ll be well-positioned to lead in the AI-driven future.