Building AI agents that actually work in production isn’t about having the smartest model or the fanciest tools. It’s about mastering something far more subtle yet powerful: context engineering.

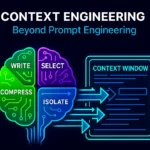

After analyzing millions of real-world agent interactions, one truth emerges consistently: context engineering is the discipline of designing and building dynamic systems that provide the right information and tools, in the right format, at the right time, to give an LLM everything it needs to accomplish a task. This isn't just enhanced prompting; it's a fundamentally different approach to building intelligent systems.

Why Context Engineering Changes Everything

Traditional chatbots handle simple back-and-forth conversations. Agents? They operate complex state machines, execute dozens of operations, and maintain context across extended interactions. That difference makes context engineering not merely helpful but critical.

```mermaid
graph TB
    A[Traditional Chatbot] --> B[Single Exchange]
    B --> C[Static Response]
    D[AI Agent] --> E[State Management]
    E --> F[Multi-Step Tasks]
    F --> G[Dynamic Context]
    G --> H[Tool Interactions]
    H --> I[Extended Memory]
    style D fill:#e3f2fd
    style A fill:#fce4ec
```

The numbers tell the story: a single agent task can involve 50 or more tool calls. Because each step appends to an ever-growing context while the model's output is usually a short action, input tokens can outnumber output tokens by 100:1 or more. Traditional fine-tuning approaches struggle to keep up with this complexity; context engineering is built for it.

The Six Principles That Transform Agents

1. Design Around the KV-Cache

Here’s the uncomfortable truth: your KV-cache hit rate is the most important metric for production agents. Period.

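Key-value (KV) caching stores the attention keys and values the model has already computed for processed tokens, so they don't have to be recomputed. With prefix caching, requests that share an identical prompt prefix can reuse that work across calls. Because reuse stops at the first token that differs, even a one-token change early in the context forces everything after it to be recomputed. The financial impact? With some providers, cached input tokens cost about one-tenth as much as uncached ones.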
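To make the pricing gap concrete, here is a back-of-envelope cost estimate. The per-token prices are illustrative assumptions chosen to reflect the 10x cached/uncached gap, not any provider's current price list, and the token counts are hypothetical, picked to match the 100:1 input-to-output ratio described earlier.

```python
# Illustrative prices in USD per million tokens. These are assumptions chosen to
# reflect a 10x cached/uncached gap; check your provider's current price list.
PRICE_UNCACHED_INPUT = 3.00
PRICE_CACHED_INPUT = 0.30
PRICE_OUTPUT = 15.00


def task_cost(input_tokens: int, output_tokens: int, cache_hit_rate: float) -> float:
    """Estimate one task's cost in USD.

    cache_hit_rate is the fraction of input tokens served from the cache.
    Any surcharge for writing to the cache is ignored for simplicity.
    """
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    cached = input_tokens * cache_hit_rate
    uncached = input_tokens - cached
    total = (
        uncached * PRICE_UNCACHED_INPUT
        + cached * PRICE_CACHED_INPUT
        + output_tokens * PRICE_OUTPUT
    )
    return total / 1_000_000


# Hypothetical task: 1,000,000 input tokens and 10,000 output tokens (100:1).
for rate in (0.0, 0.5, 0.9):
    print(f"hit rate {rate:.0%}: ${task_cost(1_000_000, 10_000, rate):.2f}")
```

Under these assumed prices the same task costs about $3.15 at a 0% hit rate and about $0.72 at 90%. Because input outweighs output 100:1, input pricing dominates the bill, which is why the hit rate matters so much.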

```mermaid
flowchart LR
    A[User Input] --> B[Agent Context]
    B --> C[KV Cache Check]
    C -->|Hit| D[Fast Response]
    C -->|Miss| E[Full Computation]
    E --> F[Update Cache]
    F --> D
    G[Cache Efficiency Factors]
    G --> H[Stable Prefixes]
    G --> I[Append-Only Context]
    G --> J[Strategic Breakpoints]
    style C fill:#e8f5e8
    style D fill:#e8f5e8
```

The Cache Killers to Avoid:

- Timestamps precise to the second in system prompts

- Dynamic system messages that change based on current state

- Non-deterministic serialization of configuration objects

What Works:

- Use deterministic serialization everywhere

- Keep prompt prefixes absolutely stable

- Design append-only context structures
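The practices above can be sketched in a few lines of Python. This is a minimal illustration, not a production harness: the prompt text, the config keys, and the model name are hypothetical, and the character-level prefix comparison is only a rough stand-in for token-level prefix caching.

```python
import json
from datetime import date


def serialize_config(config: dict) -> str:
    """Deterministic JSON: sorted keys and fixed separators, so equal configs
    always produce byte-identical text (and therefore identical tokens)."""
    return json.dumps(config, sort_keys=True, separators=(",", ":"), ensure_ascii=False)


def build_system_prompt(config: dict, today: date) -> str:
    # A date-level timestamp changes at most once a day; a seconds-precise one
    # would change the prefix on every request and defeat the cache.
    return (
        "You are an agent that completes tasks with tools.\n"
        f"Current date: {today.isoformat()}\n"
        f"Config: {serialize_config(config)}\n"
    )


class AppendOnlyContext:
    """Context that only grows at the end; earlier entries are never rewritten."""

    def __init__(self, system_prompt: str) -> None:
        self._entries: list[str] = [system_prompt]

    def append(self, entry: str) -> None:
        self._entries.append(entry)

    def render(self) -> str:
        return "".join(self._entries)


def shared_prefix_len(a: str, b: str) -> int:
    """Characters in the common prefix: a rough proxy for what a prefix cache
    could reuse (real caches work on tokens, not characters)."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


# Same config, different key insertion order: identical serialization.
cfg_a = {"model": "example-model", "max_steps": 50}
cfg_b = {"max_steps": 50, "model": "example-model"}
ctx = AppendOnlyContext(build_system_prompt(cfg_a, date(2025, 1, 1)))
before = ctx.render()
ctx.append("user: find the cheapest flight\n")
print(serialize_config(cfg_a) == serialize_config(cfg_b))
print(shared_prefix_len(before, ctx.render()) == len(before))
```

Both checks print `True`: key order no longer affects the serialized prompt, and appending a new turn leaves the entire previous context intact as a reusable prefix.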

2. Mask, Don’t Remove – Smart Action Management

As agents gain capabilities, their action spaces naturally expand. User-configurable tools can easily lead to hundreds of available functions, causing models to select suboptimal actions or take inefficient paths. The natural instinct is to dynamically add or remove tools, but this approach creates two critical problems:

- Cache Invalidation: Tool definitions typically appear early in context, so any modification invalidates the cache for all subsequent content

- Reference Confusion: When previous actions reference tools no longer in the current context, models become confused and may hallucinate or violate schemas

```mermaid
graph LR
    A[All Available Tools] --> B[Context-Aware State Machine]
    B --> C[Logit Masking]
    C --> D[Constrained Action Selection]
    E[Current State] --> B
    F[Task Context] --> B
    style B fill:#fff3e0
    style C fill:#fff3e0
```

Implementation Strategy

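Instead of removing tools, keep every tool definition in context and mask the model's choices: a context-aware state machine decides which actions are valid in the current state, and the token logits for invalid actions are masked during decoding. Most model providers expose this indirectly through response prefill or constrained function calling, typically in three modes: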

- Auto Mode: Model may or may not call a function

- Required Mode: Model must call a function, choice unconstrained

- Specified Mode: Model must call from a specific subset

By designing action names with consistent prefixes (e.g., browser_navigate, browser_click, shell_execute, shell_read), you can easily constrain action selection to specific tool groups without modifying tool definitions.
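Real logit masking happens inside the decoder, or through a provider's prefill and constrained-decoding features, and the details are provider-specific. The sketch below simulates the idea in plain Python: the tool list stays fixed, a hypothetical state machine maps each agent state to allowed name prefixes, and every other tool's score is set to negative infinity before a greedy choice. The states and scores are invented for illustration.

```python
import math

# The full tool list never changes, so the cached prefix containing the tool
# definitions stays valid. Names follow the prefix convention from the text.
TOOLS = ["browser_navigate", "browser_click", "shell_execute", "shell_read", "reply_user"]

# Hypothetical state machine: the tool-name prefixes each agent state allows.
ALLOWED_PREFIXES = {
    "awaiting_user": ("reply_",),
    "browsing": ("browser_",),
    "any": ("browser_", "shell_", "reply_"),
}


def mask_scores(scores: dict[str, float], state: str) -> dict[str, float]:
    """Set the score of every tool outside the allowed prefixes to -inf."""
    allowed = ALLOWED_PREFIXES[state]
    return {
        name: scores.get(name, -math.inf) if name.startswith(allowed) else -math.inf
        for name in TOOLS
    }


def select_action(scores: dict[str, float], state: str) -> str:
    """Greedy choice over the masked scores."""
    masked = mask_scores(scores, state)
    best = max(masked, key=masked.__getitem__)
    if masked[best] == -math.inf:
        raise RuntimeError(f"no tool is allowed in state {state!r}")
    return best


# Hypothetical per-tool scores, standing in for the model's logits.
scores = {
    "browser_navigate": 1.2,
    "browser_click": 0.4,
    "shell_execute": 2.5,
    "shell_read": 0.9,
    "reply_user": 0.1,
}
print(select_action(scores, "any"))       # shell_execute
print(select_action(scores, "browsing"))  # browser_navigate
```

The model's overall favorite, `shell_execute`, wins when everything is allowed, but in the `browsing` state the mask restricts the choice to `browser_*` tools without touching a single tool definition.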

3. File System as Extended Memory

Modern language models offer impressive context windows, but real-world agent tasks frequently exceed these limits. More critically, model performance often degrades with extremely long contexts, and the cost scales linearly with token count even with caching.

Traditional approaches use context truncation or compression, but these create a fundamental problem: agents need access to all prior state to make optimal decisions, and you can’t predict which historical information might become critical later in the task.

```mermaid
graph TB
    A[Agent Context Window] --> B[Critical Recent State]
    A --> C[Tool Definitions]
    A --> D[Active Task State]
    E[File System Memory] --> F[Historical Actions]
    E --> G[Large Observations]
    E --> H[Intermediate Results]
    E --> I[Knowledge Base]
    E --> J[Task Plans]
    K[Agent Decision Engine] --> A
    K --> E
    L[Memory Operations]
    L --> M[Write Structured Data]
    L --> N[Read On Demand]
    L --> O[Maintain State]
    style E fill:#e8f5e8
    style A fill:#fff3e0
```

Implementing File-Based Memory

The file system serves as unlimited, persistent, and directly operable memory for agents. Models learn to write structured information to files and read from them on demand, treating the file system not just as storage, but as externalized working memory.

Restorable Compression: Design compression strategies that preserve the ability to restore information. Web page content can be removed from context as long as URLs are preserved. Document contents can be omitted if file paths remain available. This approach maintains information accessibility while managing context length.
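A minimal sketch of restorable compression, assuming each observation carries a handle (a URL or file path) that can be used to fetch its content again. The `fetch` callable is a hypothetical stand-in for reading a file or re-downloading a page; here a plain dict plays that role.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Observation:
    source: str             # URL or file path kept as the restore handle
    content: Optional[str]  # full text, or None once compressed out of context


def compress(obs: Observation) -> Observation:
    """Drop the bulky content but keep the handle needed to get it back."""
    return Observation(source=obs.source, content=None)


def render(obs: Observation) -> str:
    """What the model actually sees for this observation."""
    if obs.content is None:
        return f"[content omitted; re-read from {obs.source} if needed]"
    return f"[{obs.source}]\n{obs.content}"


def restore(obs: Observation, fetch: Callable[[str], str]) -> Observation:
    """Re-fetch omitted content on demand through the preserved handle."""
    if obs.content is not None:
        return obs
    return Observation(source=obs.source, content=fetch(obs.source))


# A dict stands in for the file system (or a web fetch) in this example.
store = {"notes/report.md": "Quarterly revenue grew in every region."}
obs = Observation("notes/report.md", store["notes/report.md"])
small = compress(obs)
print(render(small))
print(restore(small, store.__getitem__).content)
```

The compression is lossless in the sense that matters: nothing is gone for good, because the one-line placeholder still tells the agent exactly where to look.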

Structured External Memory: Agents learn to create and maintain structured files that serve specific memory functions—task plans, intermediate results, knowledge bases, and historical summaries. This external memory system scales indefinitely and persists across agent sessions.
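One way to give an agent structured external memory is a small file-backed store with one JSON file per named slot, such as a task plan or intermediate results. The `FileMemory` class below is a hypothetical helper, not a library API; in a real agent its `write` and `read` methods would be exposed as tools, and only the short confirmation string would need to stay in context.

```python
import json
import tempfile
from pathlib import Path


class FileMemory:
    """Hypothetical file-backed memory: one JSON file per named slot.

    Assumes slot names are simple, trusted identifiers (no path separators).
    """

    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def _path(self, name: str) -> Path:
        return self.root / f"{name}.json"

    def write(self, name: str, data) -> str:
        path = self._path(name)
        path.write_text(json.dumps(data, indent=2, sort_keys=True), encoding="utf-8")
        # Only this short reference needs to remain in the model's context.
        return f"saved {name} to {path.name}"

    def read(self, name: str, default=None):
        path = self._path(name)
        if not path.exists():
            return default
        return json.loads(path.read_text(encoding="utf-8"))

    def list_slots(self) -> list[str]:
        return sorted(p.stem for p in self.root.glob("*.json"))


with tempfile.TemporaryDirectory() as tmp:
    memory = FileMemory(Path(tmp))
    print(memory.write("task_plan", {"goal": "summarize reports", "steps": ["read", "draft"]}))
    print(memory.list_slots())
    print(memory.read("task_plan")["goal"])
```

Because the files outlive any single model call, pointing `root` at a persistent directory instead of a temporary one is what lets this memory carry over between sessions.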

4. Attention Manipulation Through Recitation

Long agent tasks suffer from attention drift. Critical objectives get buried in growing contexts. The solution? Strategic recitation that keeps important information in recent context.

```mermaid
flowchart TD
    A[Initial Task Objective] --> B[Tool Execution Loop]
    B --> C[Context Growth]
    C --> D[Attention Drift Risk]
    E[Recitation Mechanism] --> F[Update Task Status]
    F --> G[Restate Objectives]
    G --> H[Synthesize Progress]
    H --> I[Recent Context Boost]
    D --> E
    I --> J[Improved Decision Making]
    K[Recitation Patterns]
    K --> L[Progressive Task Lists]
    K --> M[State Summaries]
    K --> N[Decision Rationale]
    style E fill:#e3f2fd
    style I fill:#e3f2fd
```

Strategic Recitation Patterns

Effective agents develop patterns of reciting key information into recent context:

Progressive Task Lists: Create and continuously update structured task breakdowns, checking off completed items and highlighting remaining objectives. This keeps the global plan in the model’s immediate attention span.

State Summaries: Periodically synthesize current state and progress into concise summaries that appear in recent context. This provides crucial context compression while maintaining goal alignment.

Decision Rationale: When making complex decisions, have agents explicitly state their reasoning and how it connects to the original objectives. This reinforces goal-directed behavior and provides debugging insights.
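A progressive task list can be sketched as a small data structure that is re-rendered and appended at the end of the context on each turn. This is an illustrative assumption about one possible harness design: here the harness renders the list, whereas in other designs the agent itself rewrites a to-do file through its own tools. The `<todo>` wrapper is a hypothetical convention.

```python
from dataclasses import dataclass, field


@dataclass
class TaskList:
    objective: str
    items: list[str]
    done: set[int] = field(default_factory=set)

    def complete(self, index: int) -> None:
        self.done.add(index)

    def render(self) -> str:
        lines = [f"Objective: {self.objective}"]
        for i, item in enumerate(self.items):
            mark = "x" if i in self.done else " "
            lines.append(f"- [{mark}] {item}")
        return "\n".join(lines)


def with_recitation(history: list[str], tasks: TaskList) -> list[str]:
    """Append the current plan at the end of the context.

    Earlier entries are left untouched, so the recitation lands in the most
    recent part of the context without disturbing the cached prefix.
    """
    return history + [f"<todo>\n{tasks.render()}\n</todo>"]


tasks = TaskList("Compare three vendors", ["collect pricing", "compare features", "write summary"])
tasks.complete(0)
context = with_recitation(["observation: pricing page fetched"], tasks)
print(context[-1])
```

Each recitation costs a few extra tokens per turn, a trade the pattern makes deliberately: it buys a fresh copy of the goal right where the model's attention is strongest.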

5. Embrace Productive Failure

Agents make mistakes, and that is not a defect to hide; it is part of how they operate. Language models hallucinate, external tools fail, environments return errors, and edge cases emerge constantly. In multi-step tasks, failure is part of the natural learning loop.

The instinctive response is to hide these failures: clean up traces, retry actions, or reset state and hope for better results. However, erasing failure removes valuable evidence that enables model adaptation.

```mermaid
graph LR
    A[Agent Action] --> B{Success?}
    B -->|Yes| C[Continue Task]
    B -->|No| D[Error Occurs]
    D --> E[Preserve Error Context]
    E --> F[Analyze Failure]
    F --> G[Update Strategy]
    G --> H[Retry with Learning]
    I[Traditional Approach]
    I --> J[Hide Errors]
    J --> K[Reset State]
    K --> L[Repeat Mistakes]
    style E fill:#e8f5e8
    style F fill:#e8f5e8
    style J fill:#ffebee
    style K fill:#ffebee
```

Learning from Failure

Preserve Error Context: When actions fail, keep the failure and error messages in context. Models implicitly update their beliefs based on observed failures, reducing the likelihood of repeating the same mistakes.

Error Recovery Patterns: Error recovery is one of the clearest indicators of genuinely agentic behavior. A model that can see its own failures in context is better positioned to choose a different approach when a similar situation comes up later in the task.

Failure Analysis: In complex tasks, agents can explicitly analyze what went wrong and why, creating useful signals for subsequent decisions. This reflective step can make agents noticeably more robust.
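Preserving error context mostly comes down to how the tool-execution wrapper records outcomes. In this minimal sketch, a failed call is written into the context as an observation carrying the exception type and message, rather than being retried silently or discarded. The entry format and the `divide` tool are hypothetical examples.

```python
from typing import Any, Callable


def run_tool(context: list[dict], name: str, fn: Callable[..., Any], args: dict) -> bool:
    """Run one tool call and record the outcome, success or failure, in context."""
    context.append({"type": "action", "tool": name, "args": args})
    try:
        result = fn(**args)
    except Exception as exc:
        # Keep the failure visible so the model can adjust, instead of retrying
        # silently or resetting state and losing the evidence.
        context.append({"type": "observation", "tool": name,
                        "error": f"{type(exc).__name__}: {exc}"})
        return False
    context.append({"type": "observation", "tool": name, "result": result})
    return True


def divide(a: float, b: float) -> float:
    return a / b


context: list[dict] = []
run_tool(context, "divide", divide, {"a": 1, "b": 0})
run_tool(context, "divide", divide, {"a": 6, "b": 3})
for entry in context:
    print(entry)
```

The failed call and its `ZeroDivisionError` stay in the history next to the successful retry, so the model can see both what went wrong and what worked.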

6. Avoid Few-Shot Traps

Few-shot prompting works well for isolated tasks but can backfire in agent systems. Models are strong imitators: when the context fills with similar action-observation pairs, an agent tends to keep repeating the same pattern even after it stops being optimal, and can get stuck in loops.

```mermaid
graph TB
    A[Few-Shot Examples] --> B[Pattern Recognition]
    B --> C[Rigid Behavior]
    C --> D[Suboptimal Decisions]
    E[Pattern Breaking Strategies] --> F[Structured Variation]
    E --> G[Context Diversification]
    E --> H[Format Randomization]
    F --> I[Flexible Decision Making]
    G --> I
    H --> I
    style C fill:#ffebee
    style D fill:#ffebee
    style I fill:#e8f5e8
```

Maintaining Decision Diversity

Structured Variation: Introduce controlled randomness in action and observation formatting. Use different serialization templates, vary phrasing, and add minor noise in ordering. This breaks rigid patterns while maintaining clarity.

Context Diversification: When possible, include examples of different approaches and decision-making styles. This helps prevent agents from getting locked into suboptimal patterns.

Pattern Breaking: Actively monitor for repetitive behavior and introduce variation when detected. This can be as simple as changing how information is presented or restructuring similar observations.
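Structured variation can be as simple as choosing among several equivalent templates when an observation is first written into the context. The templates below are hypothetical. A seeded generator keeps runs reproducible, and each entry is rendered only once, so the variation never rewrites history or breaks the deterministic, append-only prefix that the KV-cache depends on.

```python
import json
import random

# Hypothetical templates that all carry the same information.
TEMPLATES = [
    lambda tool, data: f"Result of {tool}: {json.dumps(data, sort_keys=True)}",
    lambda tool, data: f"[{tool}] returned:\n{json.dumps(data, indent=2, sort_keys=True)}",
    lambda tool, data: f"Observation ({tool}): "
    + "; ".join(f"{key}={value}" for key, value in sorted(data.items())),
]


def format_observation(tool: str, data: dict, rng: random.Random) -> str:
    """Render an observation with a randomly chosen, equivalent template.

    Call this once, when the entry is appended. Re-rendering older entries
    would change the context prefix and invalidate the KV-cache.
    """
    return rng.choice(TEMPLATES)(tool, data)


rng = random.Random(42)  # seeded so runs are reproducible
for _ in range(3):
    print(format_observation("shell_read", {"path": "a.txt", "lines": 3}, rng))
```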

The Agent Architecture That Works

Here’s how these principles come together in a production-ready agent architecture:

```mermaid
graph TB
    subgraph "Context Layer"
        A[Stable System Prompt]
        B[Tool Definitions]
        C[Task Objectives]
    end
    subgraph "Dynamic Context"
        D[Recent Actions]
        E[Current State]
        F[Error History]
    end
    subgraph "Memory Layer"
        G[File System]
        H[Structured Knowledge]
        I[Historical Context]
    end
    subgraph "Decision Engine"
        J[Action Selection]
        K[State Management]
        L[Cache Optimization]
    end
    subgraph "Execution Layer"
        M[Tool Execution]
        N[Error Recovery]
        O[Progress Tracking]
    end
    A --> J
    B --> J
    C --> J
    D --> J
    E --> J
    F --> J
    G --> K
    H --> K
    I --> K
    J --> M
    K --> M
    L --> M
    M --> D
    N --> F
    O --> E
    style J fill:#e3f2fd
    style K fill:#e3f2fd
    style L fill:#e3f2fd
```

Implementation Best Practices

1. Session Management and State Persistence

Design clear session boundaries with state persistence strategies. Long-running agents need mechanisms to save and restore context while maintaining cache efficiency.

2. Multi-Agent Coordination

When building systems with multiple specialized agents, context engineering becomes even more critical. Design clear protocols for information sharing and task handoffs.

3. Performance Monitoring

Track the metrics that matter:

- KV-cache hit rates – The most critical performance indicator

- Context length growth patterns – Monitor for runaway context expansion

- Tool selection diversity – Detect pattern traps early

- Error recovery rates – Measure learning from failures

- Attention distribution – Ensure important information stays visible
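Several of these metrics can be computed from per-call usage data. This sketch assumes your provider reports, for each model call, the total input tokens and how many of them were served from cache (many providers with prompt caching expose such a count, under provider-specific field names). The `StepUsage` record is hypothetical, the 90% target mirrors the dashboard diagram in this section, and attention distribution is left out because it is much harder to measure directly.

```python
from dataclasses import dataclass


@dataclass
class StepUsage:
    input_tokens: int         # prompt tokens sent for this model call
    cached_input_tokens: int  # of those, tokens the provider served from cache
    tool: str                 # tool the agent chose at this step


def cache_hit_rate(steps: list[StepUsage]) -> float:
    total = sum(s.input_tokens for s in steps)
    cached = sum(s.cached_input_tokens for s in steps)
    return cached / total if total else 0.0


def context_growth(steps: list[StepUsage]) -> list[int]:
    """Per-step change in prompt size; a steep, steady climb suggests runaway growth."""
    sizes = [s.input_tokens for s in steps]
    return [later - earlier for earlier, later in zip(sizes, sizes[1:])]


def tool_diversity(steps: list[StepUsage], window: int = 10) -> float:
    """Distinct tools divided by steps over the last `window` steps.

    A value near 1/window means the agent keeps picking the same tool, a possible loop.
    """
    recent = [s.tool for s in steps[-window:]]
    return len(set(recent)) / len(recent) if recent else 0.0


def health_report(steps: list[StepUsage], hit_target: float = 0.9) -> dict:
    rate = cache_hit_rate(steps)
    return {
        "cache_hit_rate": round(rate, 3),
        "cache_ok": rate > hit_target,
        "context_growth": context_growth(steps),
        "tool_diversity": round(tool_diversity(steps), 3),
    }


steps = [
    StepUsage(1_000, 0, "browser_navigate"),
    StepUsage(1_200, 1_000, "browser_click"),
    StepUsage(1_500, 1_200, "browser_click"),
]
print(health_report(steps))
```

With only three steps the first, fully uncached call drags the hit rate well below target; over a long task with a stable prefix, the cached share of each call should dominate.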

```mermaid
graph LR
    A[Monitoring Dashboard] --> B[Cache Hit Rate]
    A --> C[Context Growth]
    A --> D[Tool Diversity]
    A --> E[Error Recovery]
    A --> F[Attention Health]
    B --> G{"> 90%?"}
    G -->|No| H[Optimize Stability]
    G -->|Yes| I[Performance Good]
    C --> J{Growing Too Fast?}
    J -->|Yes| K[Implement Compression]
    J -->|No| L[Growth Normal]
    style G fill:#e8f5e8
    style I fill:#e8f5e8
    style H fill:#fff3e0
```

The Future of Context Engineering

Context engineering represents the next phase of AI development, where the focus shifts from crafting perfect prompts to building systems that manage information flow over time. This approach offers unprecedented flexibility and adaptability.

As language models continue to improve with longer context windows and better attention mechanisms, context engineering will become even more powerful. The patterns and strategies outlined here provide a foundation for building agents that don’t just work—they excel.

Key Takeaways

The path to production-ready AI agents isn’t paved with more powerful models or sophisticated tools. It’s built with smart context engineering:

- Prioritize KV-cache optimization – It’s your most impactful performance lever

- Use masking over removal – Preserve context stability while managing complexity

- Treat file systems as unlimited memory – Scale beyond context window limitations

- Manipulate attention strategically – Keep critical information visible through recitation

- Learn from failures – Error context gives the model evidence it can adapt to within the task

- Maintain decision diversity – Avoid the few-shot pattern traps

Success in AI agent development comes down to understanding how to orchestrate information flow to create systems greater than the sum of their parts. Master context engineering, and you’ll build agents that don’t just function—they truly excel.

The agentic future will be built one context at a time.